Generating Noises with Pure Data

Procedural Audio in Unity with Pure Data

Why? (aside from being cool)

In Two Dots, each time we release a new theme (every two or three app updates), we not only pick a new set of board and background colors, we also make a new set of sound effects. That means with each new theme, we need to generate, organise, and pack at least 40 new SFX (20 dot connects and 20 square connects of different lengths). Though our initial concern was file size, it turned out that rendering these was a huge overhead for our in-house composers. Although each sound is essentially a different note (or chord) on the synth instrument, they need to be generated multiple times to get a clean and level render for Unity to import. They probably still haven’t forgiven us for the time we needed them to re-render all the worlds at a higher volume… Generating these procedurally seemed like a good solution. If each new theme meant a new ‘instrument’ to define what the connects should sound like, the game at run time would just need to load that up, then play whatever note or chord is needed. Now we just needed a way to make these instruments, and teach Unity to play them.

Coding an instrument

One option was always to write an audio generator for Unity ourselves. Since you can directly manipulate the audio stream, we could make a C# script that plays some simple tones and exposes a few parameters to tweak for each theme. Just a sine wave at first, but OK we should allow for multiple oscillators, then I guess an LFO is vital, oh but what about different waveforms, and we really should allow some reverb, ah and we need filters! and… We actually managed to make a prototype of this, but quickly realised how limiting it would always be, when the composers are used to fancy audio software and a studio full of vintage analogue synths.
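To give a feel for where that road starts, here is a minimal sketch of the "just a sine wave" stage. It is not our prototype: it is written in C++ so it stands alone, and the `SineOscillator` type, its fields and its default values are all made up for illustration. In Unity the equivalent loop would sit inside a C# `OnAudioFilterRead(float[] data, int channels)` method, which hands you the same kind of interleaved buffer.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical single-oscillator voice; names and defaults are illustrative.
struct SineOscillator {
    double phase = 0.0;          // current phase in radians
    double frequencyHz = 440.0;  // pitch of the tone
    double sampleRate = 48000.0; // assumed output rate
    float gain = 0.25f;          // linear output level

    // Fill an interleaved buffer (frames * channels samples) with a sine tone,
    // writing the same sample to every channel of a frame.
    void render(std::vector<float>& buffer, int channels) {
        assert(channels > 0);
        const std::size_t ch = static_cast<std::size_t>(channels);
        assert(buffer.size() % ch == 0);

        const double twoPi = 6.283185307179586;
        const double step = twoPi * frequencyHz / sampleRate;

        for (std::size_t i = 0; i < buffer.size(); i += ch) {
            const float sample = gain * static_cast<float>(std::sin(phase));
            for (std::size_t c = 0; c < ch; ++c) {
                buffer[i + c] = sample;
            }
            phase += step;
            if (phase >= twoPi) {
                phase -= twoPi;  // keep the phase bounded between calls
            }
        }
    }
};
```

Every item on the wish list above (more oscillators, an LFO, filters, reverb) means more state and more code in that inner loop, which is roughly where we gave up.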

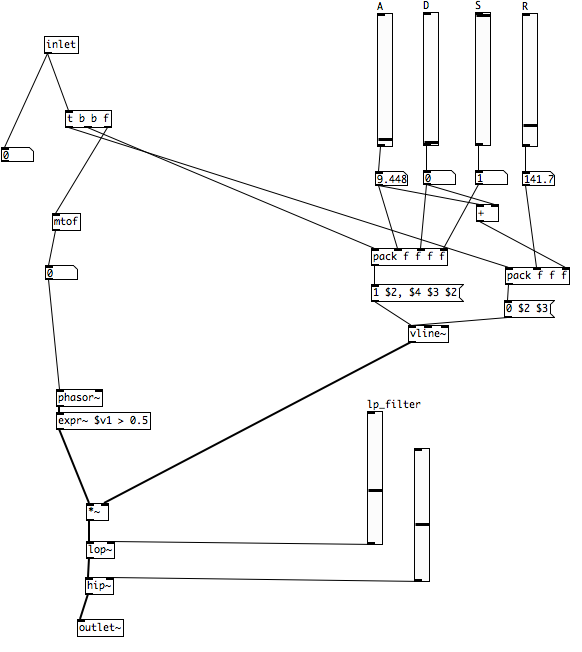

As always, you shouldn’t do work that someone else has already done; they’re probably better than you anyway. In our case, those better people have made Pure Data. Pure Data (PD) is a visual programming language, similar to (and started by the same author as) Max/MSP. By making graph-like diagrams called ‘patches’, you can easily generate audio with built-in oscillators, filters and effects. If each theme was a new patch instrument, we’d just need a way to make it play whatever notes we needed at run time.

Teaching it to Unity

LibPD is another open source project that lets you embed Pure Data’s audio synthesis code as a native library on a wide range of platforms. So we knew there was at least theoretically a way to play patches on a mobile device at runtime. It turned out there were already a couple of solutions for using LibPD with Unity, but each had a significant limitation. Some couldn’t run on mobile platforms (aside from a known problem for Android, but more on that later), and the ones that could were all written for the Unity 4.x audio system. This legacy system still worked, but relied on us funnelling all audio output from LibPD (native) to a Unity thread (C#/OnAudioFilterRead) and back to native (FMOD) to be played. For performance and latency reasons, we couldn’t use this in a game.

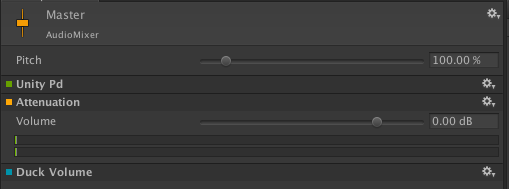

But, don’t despair, there is always another way. And that way is Unity 5.x’s Native Audio Plugin framework. This framework gives us a way to modify (or in our case, fully synthesise) an audio frame in native code, and pass it back to Unity to be played, all on the native audio thread. Now all we needed to do was make a wrapper that uses Unity’s plugin SDK, but talks to LibPD to generate the audio and send messages to/from Unity C#.
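One detail any wrapper like this has to handle is block size: Pure Data renders audio in fixed "ticks" of 64 frames by default, while the host hands the plugin a buffer of whatever length it likes. The sketch below shows one simple way to bridge the two, under some loud assumptions: `renderCallback` and `processTicks` are hypothetical names, the callback is only loosely modelled on a host audio callback and is not the Unity SDK signature, and the engine is a stub that writes a constant. In a real wrapper the stub is where a call such as `libpd_process_float(ticks, in, out)` would go.

```cpp
#include <cassert>
#include <cstddef>

// Pure Data processes audio in fixed-size ticks (64 frames by default).
constexpr unsigned int kPdBlockSize = 64;

// Stand-in for the synthesis engine (hypothetical). It fills the interleaved
// output with a constant so the sketch compiles and runs on its own.
void processTicks(unsigned int ticks, float* out, unsigned int channels) {
    const std::size_t samples =
        static_cast<std::size_t>(ticks) * kPdBlockSize * channels;
    for (std::size_t i = 0; i < samples; ++i) {
        out[i] = 0.5f;
    }
}

// Hypothetical per-buffer callback invoked on the audio thread.
// Returns false and outputs silence if the host buffer is not a whole
// number of ticks, rather than reading or writing out of bounds.
bool renderCallback(float* outBuffer, unsigned int frames, unsigned int channels) {
    assert(outBuffer != nullptr && channels > 0);

    if (frames % kPdBlockSize != 0) {
        const std::size_t samples = static_cast<std::size_t>(frames) * channels;
        for (std::size_t i = 0; i < samples; ++i) {
            outBuffer[i] = 0.0f;
        }
        return false;
    }

    processTicks(frames / kPdBlockSize, outBuffer, channels);
    return true;
}
```

Falling back to silence is the lazy option; a more forgiving wrapper could buffer leftover frames between callbacks instead.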

We call that wrapper UnityPD, and we now use it to generate audio in Dots & Co. Each theme is a patch made in Pure Data by the audio team (actually a folder including some utility patches as well as the ‘instrument’ patch). When loading a level, we tell LibPD to load up the theme’s patch, and each time a dot is connected we send a “playNote” message to LibPD, along with the MIDI note(s) that the instrument should play. Not only have we gone from a few hundred KB of sound effect files to a few hundred bytes of patch files for each world (they add up!), but time is no longer wasted creating (and testing, and recreating) a bunch of nearly identical .wav files.
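Sending MIDI note numbers keeps the game side simple, because turning a note into a pitch is one line of arithmetic. Inside a patch, Pure Data's `mtof` object does this conversion; the sketch below shows the same standard equal-temperament formula in C++. The function name is ours for illustration, and it assumes the usual tuning of A4 (note 69) at 440 Hz, which a given instrument patch is free to ignore.

```cpp
#include <cassert>
#include <cmath>

// Convert a MIDI note number to a frequency in Hz, assuming equal
// temperament with A4 (MIDI note 69) tuned to 440 Hz.
double midiToFrequency(int midiNote) {
    assert(midiNote >= 0 && midiNote <= 127);
    return 440.0 * std::pow(2.0, (midiNote - 69) / 12.0);
}
```

For example, `midiToFrequency(69)` gives 440 Hz and `midiToFrequency(81)`, one octave up, gives 880 Hz. A chord is just several of these notes sent together.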

Gotchas

Of course it’s not all roses, there are a few things we’d like to work on more when we have the time (ha!).

- Android patch loading. A major reason that the older Unity-LibPD solutions didn’t support Android was that LibPD couldn’t load patches from the Unity app package. Android uses a URL to reach files within the bundle, while LibPD only knows how to deal with file paths. The common workaround is to just copy all the patches into the filesystem before opening, which we do on the Java side into the app’s PersistentDataPath. This works! But is kinda hacky, and we currently just guess how long it’ll take before trying to open the patch.

- Windows support. There is none. We tried and tried to make Unity recognise the LibPD .dll, but it made me too angry. Nearly all of us in the studio use OSX, so it’s a low priority for us (sorry Ryan).

- Multiple synthesiser instances. LibPD currently only supports one instance running at a time. This means that only a single audio stream can be generated or modified at once. For us this is fine, because only a single sound type is ever active at once. More complicated audio can still be made, but extra care has to be taken to make sure the patches and messages don’t conflict with each other. Also, on the Unity side only a single Mixer can include the UnityPD effect, so you can’t have LibPD generated audio running through different Unity-side FX. There is currently work on the PD side to get instances supported, but it’s still a ways off being stable enough for us.

- Audio performance. LibPD runs relatively well, even on our target mobile devices, at least if you’re measuring based on CPU use. Unfortunately, we have hit audio issues (dropped samples), especially on Android. Certain Android devices are very…optimistic…about the performance of their audio threads, and UnityPD seems to be pushing some of them a bit hard, leading to dropped samples when the audio isn’t generated in time. We’ve managed to mitigate a lot of these issues by increasing buffer sizes, and being frugal about complicated effects, but there might be a better way to deal with this, especially considering how well it runs on iOS, even with a small buffer size (low latency).
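The buffer size trade-off in that last point is easy to put numbers on. One buffer's worth of audio is both the latency it adds and the deadline the audio thread has to fill it, so a bigger buffer buys time at the cost of responsiveness. Here is a small sketch of the arithmetic; the function name is illustrative and the 48 kHz sample rate used in the examples is an assumption, not a measurement from our devices.

```cpp
#include <cassert>

// Duration of one audio buffer in milliseconds. This is both the latency the
// buffer adds and the time available to render it before samples are dropped.
double bufferDurationMs(int frames, double sampleRate) {
    assert(frames > 0 && sampleRate > 0.0);
    return 1000.0 * frames / sampleRate;
}
```

At an assumed 48 kHz, a 256-frame buffer leaves about 5.3 ms to generate each block, while a 1024-frame buffer leaves about 21.3 ms, which is why bumping the buffer size papers over a sluggish audio thread.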

TLDR (just gimme)

We’ve made UnityPd available as open source on GitHub. Detailed instructions are in the package, but for most projects you can just drop the UnityPd subfolder into your project’s Plugins folder, then add the UnityPd effect to an Audio Mixer. (iOS builds need an extra step to work, so read the readme!) After that, you can open .pd patches from the StreamingAssets folder and send messages via the UnityPd.cs binding. See the demo project for details.